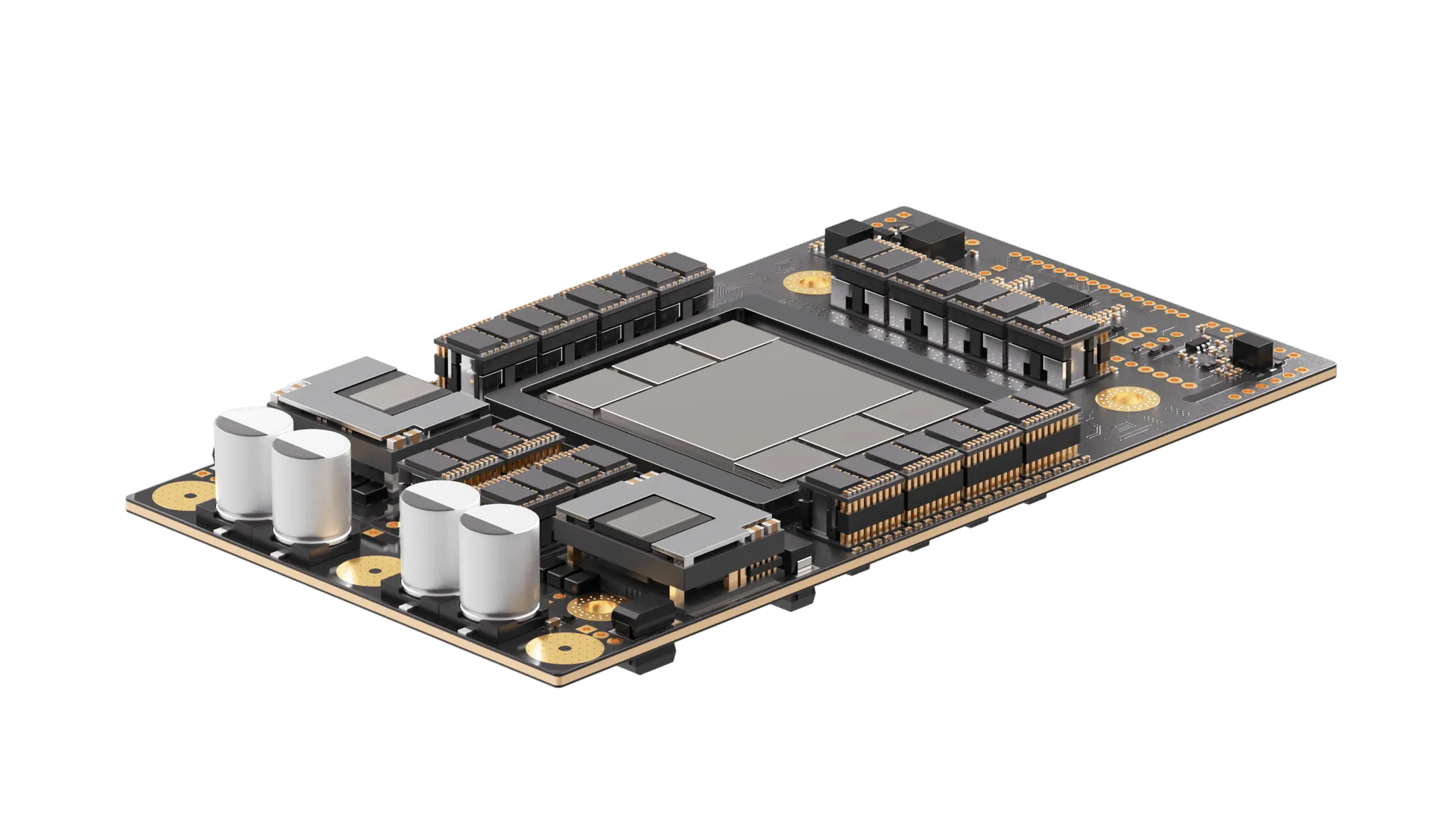

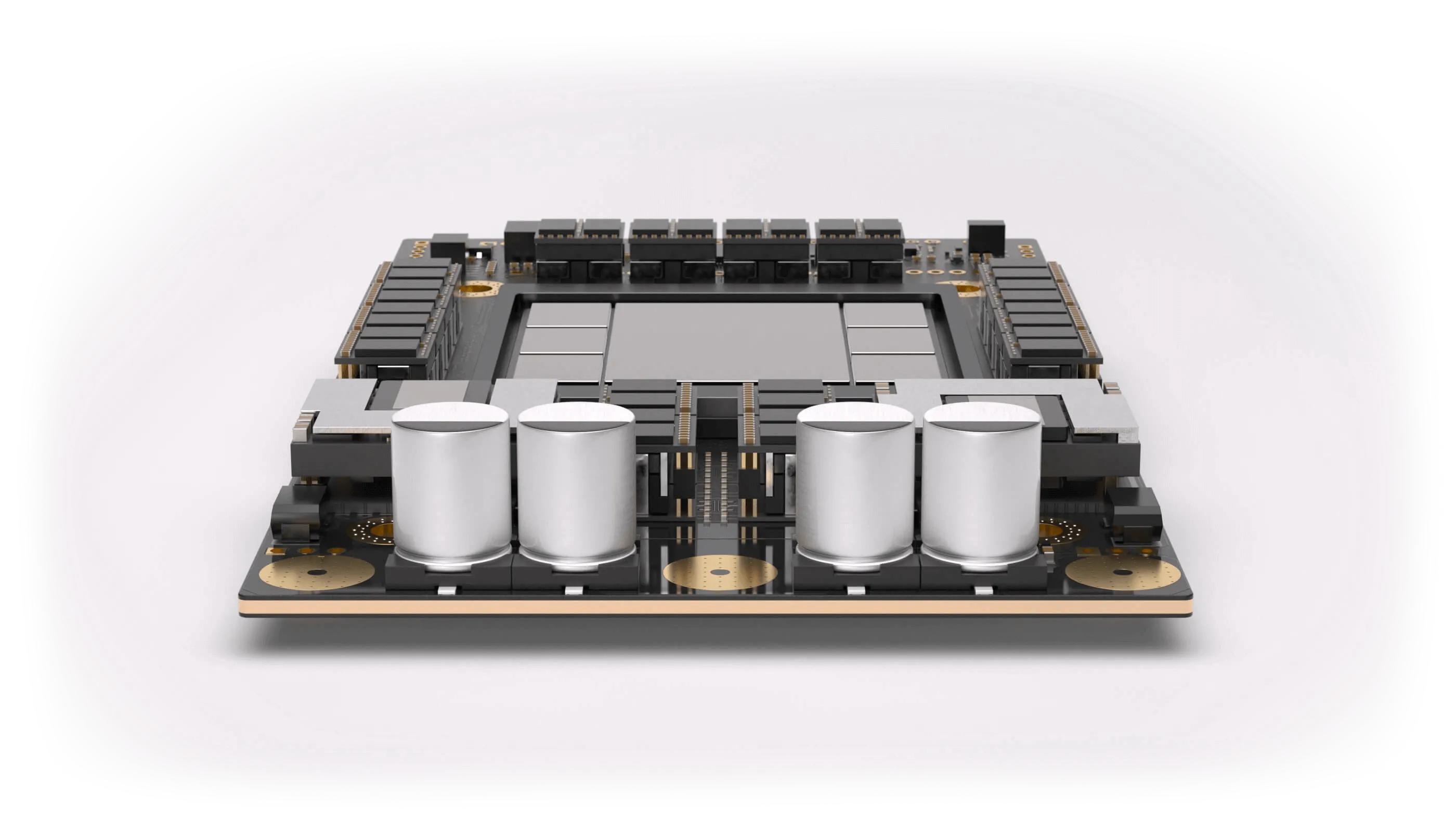

Meet the world's first transformer supercomputer

_Transformers etched into silicon

By burning the transformer architecture into our chips, we’re creating the world’s most powerful servers for transformer inference.

Chart: tokens per second on NVIDIA 8xA100, NVIDIA 8xH100, and Etched 8xSohu servers.

_Build products that are impossible with GPUs

Real-time voice agents

Ingest thousands of words in milliseconds

Better coding with tree search

Compare hundreds of responses in parallel

Multicast speculative decoding

Generate new content in real time
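The page does not say what "multicast" adds to speculative decoding. For readers new to the base technique, here is a minimal sketch of standard speculative sampling, not Etched's implementation. A cheap draft model proposes `k` tokens. The target model accepts each one with probability min(1, p/q), and the first rejected token is replaced by a sample from the normalized residual max(0, p − q). The `target` and `draft` callables, the dictionary-based distributions, and the function names are all hypothetical stand-ins for real models.

```python
import random
from typing import Callable, Dict, List, Tuple

Dist = Dict[str, float]                    # token -> probability
Model = Callable[[Tuple[str, ...]], Dist]  # hypothetical: prefix -> next-token distribution


def sample(dist: Dist, rng: random.Random) -> str:
    """Draw one token from a categorical distribution."""
    r, acc = rng.random(), 0.0
    for tok, p in dist.items():
        acc += p
        if r < acc:
            return tok
    return max(dist, key=dist.get)  # guard against floating-point round-off


def speculative_step(target: Model, draft: Model, prefix: Tuple[str, ...],
                     k: int, rng: random.Random) -> List[str]:
    """One round of standard speculative sampling; returns 1..k+1 new tokens."""
    # 1. The cheap draft model proposes k tokens autoregressively.
    ctx, proposed = prefix, []
    for _ in range(k):
        q = draft(ctx)
        tok = sample(q, rng)
        proposed.append((tok, q))
        ctx += (tok,)

    # 2. The target model checks each proposal. On real hardware these k checks
    #    are a single batched forward pass; here they run in a loop.
    ctx, out = prefix, []
    for tok, q in proposed:
        p = target(ctx)
        if rng.random() < min(1.0, p.get(tok, 0.0) / q[tok]):
            out.append(tok)  # accepted: keep the draft token
            ctx += (tok,)
            continue
        # Rejected: resample from the normalized residual max(0, p - q), then stop.
        residual = {t: max(0.0, pt - q.get(t, 0.0)) for t, pt in p.items()}
        z = sum(residual.values())
        out.append(sample({t: v / z for t, v in residual.items()}, rng))
        return out

    # 3. Every draft token was accepted, so the target contributes one bonus token.
    out.append(sample(target(ctx), rng))
    return out


def uniform(ctx: Tuple[str, ...]) -> Dist:
    return {"a": 0.5, "b": 0.5}


# When draft and target agree exactly, every proposal is accepted: k + 1 tokens.
print(len(speculative_step(uniform, uniform, (), k=3, rng=random.Random(0))))  # 4
```

This accept/reject rule makes the emitted tokens follow the target model's distribution, so the draft model only affects speed, not output quality.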

_Run tomorrow's trillion-parameter models

Only one core

Fully open-source software stack

Scales to 100T-parameter models

Beam search and MCTS decoding

144 GB HBM3E per chip

MoE and transformer variants
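Beam search keeps the `beam_width` highest-scoring partial sequences at each step instead of committing to the single most likely token. The sketch below illustrates the general algorithm, not Etched's decoding stack. It assumes a hypothetical `next_log_probs(prefix)` callable that stands in for a model forward pass, and it drives that callable with a made-up toy table.

```python
import math
from typing import Callable, Dict, Tuple

# Hypothetical model interface: prefix -> {next_token: log_prob}.
NextLogProbs = Callable[[Tuple[str, ...]], Dict[str, float]]


def beam_search(next_log_probs: NextLogProbs, beam_width: int, max_len: int,
                eos: str = "</s>") -> Tuple[Tuple[str, ...], float]:
    """Return the highest-scoring (tokens, log_prob) found by beam search."""
    beams = [((), 0.0)]  # live hypotheses: (tokens, cumulative log-prob)
    finished = []        # hypotheses that have emitted `eos`
    for _ in range(max_len):
        # Expand every live hypothesis by every possible next token.
        candidates = [
            (tokens + (tok,), score + lp)
            for tokens, score in beams
            for tok, lp in next_log_probs(tokens).items()
        ]
        # Keep the `beam_width` best expansions (the sort is stable, so ties keep their order).
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for tokens, score in candidates[:beam_width]:
            if tokens[-1] == eos:
                finished.append((tokens, score))
            else:
                beams.append((tokens, score))
        if not beams:
            break
    # Unfinished hypotheses still compete if max_len was reached.
    return max(finished + beams, key=lambda c: c[1])


# Toy next-token table (made up for illustration). Unknown prefixes end the sequence.
_TABLE = {
    (): {"a": 0.6, "b": 0.4},
    ("a",): {"x": 0.35, "y": 0.35, "z": 0.30},
    ("b",): {"c": 0.9, "d": 0.1},
}


def toy_model(prefix: Tuple[str, ...]) -> Dict[str, float]:
    return {t: math.log(p) for t, p in _TABLE.get(prefix, {"</s>": 1.0}).items()}


print(beam_search(toy_model, beam_width=1, max_len=3)[0])  # ('a', 'x', '</s>')
print(beam_search(toy_model, beam_width=2, max_len=3)[0])  # ('b', 'c', '</s>')
```

With a width of 1 (greedy decoding), the search commits to the locally likelier `a` and ends with probability 0.21. A width of 2 keeps `b` alive long enough to find the better sequence `b c`, with probability 0.36.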